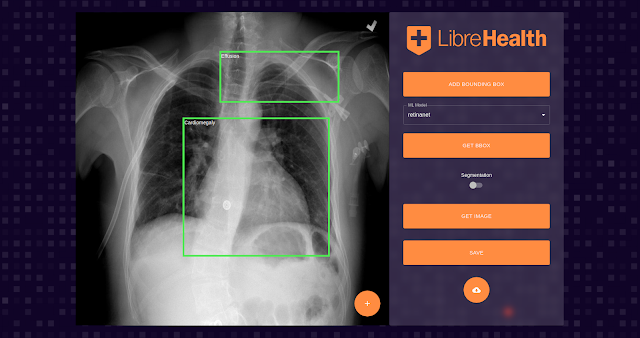

- Automatic labeling of Radiology Images (8/1/2020)

LibreHealth’s rockstar Google Summer of Code student, Kislay Singh, has made excellent progress integrating machine learning models that perform object detection on radiology images into the LibreHealth Radiology project. The module still needs further integration into the DICOM image viewer, but the progress over the past three months of the summer is highly encouraging. It also provides a solid codebase for building the assemblage between radiologists and machine learning models in our future human-AI studies.

- Blood Glucose Prediction Challenge at KDH 2020 (6/12/2020)

Our paper titled “Blood Glucose Level Prediction as Time-Series Modeling using Sequence-to-Sequence Neural Networks” will be presented at the KDH 2020 workshop, held at the European Conference on Artificial Intelligence (ECAI).

You can read the full paper here: Blood Glucose Level Prediction as Time-Series Modeling using Sequence-to-Sequence Neural Networks

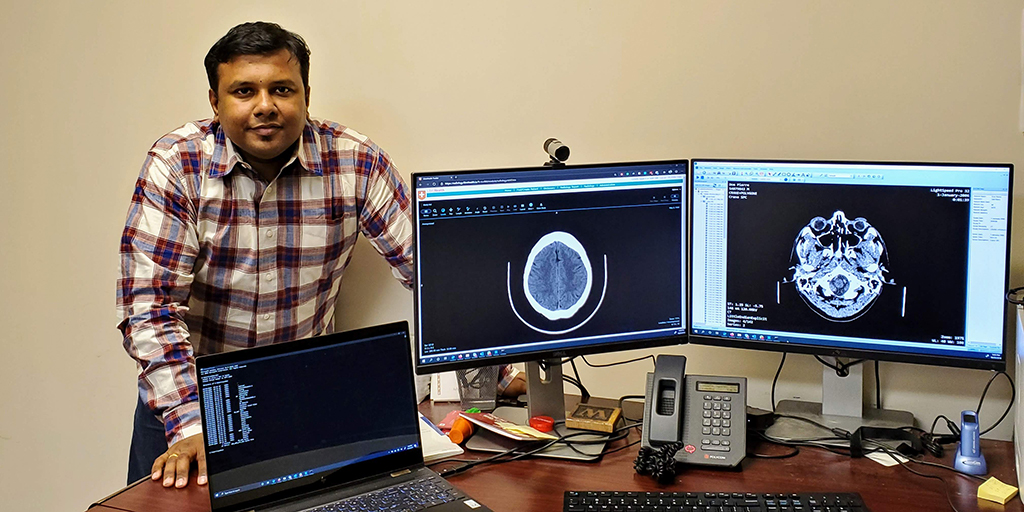

- NSF grant to study benefits of AI and radiologist co-learning (12/13/2019)

BioHealth Informatics Assistant Professor Saptarshi Purkayastha of the School of Informatics and Computing has received an $826,795 grant from the National Science Foundation, entitled “FW-HTF-RM: Measuring learning gains in man-machine assemblage when augmenting radiology work with artificial intelligence.”

Purkayastha is the principal investigator, with co-PIs Elizabeth Krupinski of Emory University, Joshua Danish of the IU School of Education, and Judy Gichoya, M.D., formerly of Indiana University. Gichoya received her M.S. in health informatics from the School of Informatics and Computing and her M.D. in radiology from the IU School of Medicine before receiving a fellowship at the Dotter Department of Interventional Radiology at Oregon Health and Science University.

The three-year grant will look at artificial intelligence (AI) in a novel way: as part of a man-machine assemblage. In the past, the AI and the human would have been trained as separate entities (much like a self-driving car and its driver) rather than learning and training together at the same time.

“We want to enable the synergy in a way that it does no patient harm and reduces burden on radiologists.”

Saptarshi Purkayastha, Dept. of BioHealth Informatics

The group has recruited 36 radiologists, ranging from first-year residents to highly experienced practitioners, and will conduct the study at Emory. Purkayastha will develop the electronic health record software tooling, and Danish will evaluate the learning. Participants will view more than 1,600 images over two six-month phases, and task performance gains will be measured for groups with and without the assemblage training.

One of the top priorities at the NSF is to discover how human work will change with the introduction of AI. The results of this study may point to how people and AI can work better on any cognitive task when they are treated as one unit. “Most people believe that the future of radiology work will be augmented with artificial intelligence in some way, but we want to enable the synergy in a way that it does no patient harm and reduces the burden on radiologists,” says Purkayastha.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. 1928481. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

This grant builds on work performed under a STEM Education Innovation and Research Institute (SEIRI) Seed Grant from 2017 to 2019.